I thought the earliest sunset occurs on the winter solstice. That's not true. As you can see here, the sun set at 5 PM for the first half of December, and started setting later after that. It will continue to rise later every day, until a plateau in early January.

Saturday, December 20, 2014

Monday, December 01, 2014

Music visualization using an RGB lamp

I wrote Winamp and Audacious music visualization plugins for my RGB lamp. The code is published on GitHub. Pitch is represented by colour, and loudness is represented by intensity. This post explains the method in detail.

The code starts with an array of FFT bin values. This is provided by the music player via its visualization plugin API. If only waveform data was available, the FFT could be computed using the FFTW library.

Each value in that array corresponds to sound intensity in a particular range of frequencies. Those ranges are all of equal width in hertz, but humans perceive pitch in a roughly logarithmic way. This means perceived pitch varies a lot between entries at the start of the array, and a little at the end of the array. This is handled by makeramps.rb. The frequency at the midpoint of each bin is mapped to pitch using the MIDI pitch formula. This allows pitch to be mapped to colour in a sort of linear way. Here's how the various FFT bins sum into the different colours:

The lowest frequency bin sums to red. Increasing frequencies sum less into red and more into green. The pitch midpoint sums into green. Then increasing frequencies sum less into green and more into blue. Finally, the highest frequency sums into blue. The code uses a single table called green_tab, with values corresponding to the fraction of the bin that is to be summed into green. Before the pitch midpoint, one minus the table value times the bin is summed into red, and after the midpoint the same is summed into blue.
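A minimal sketch of this mapping in Python, not the published makeramps.rb code. The MIDI pitch formula is standard, but the triangular ramp shape, the function names, and the assumption that the bins evenly cover 0 Hz to half the sample rate are only illustrative guesses.

```python
import math

def midi_pitch(freq_hz):
    """MIDI pitch number for a frequency (A4 = 440 Hz = note 69)."""
    return 69.0 + 12.0 * math.log2(freq_hz / 440.0)

def make_green_tab(num_bins, sample_rate):
    """Return (pitches, pitch midpoint, green_tab) for equal-width FFT bins.

    Assumption: num_bins bins evenly cover 0 Hz to sample_rate / 2.
    """
    bin_width = (sample_rate / 2.0) / num_bins
    # Pitch at the midpoint frequency of each bin.
    pitches = [midi_pitch((i + 0.5) * bin_width) for i in range(num_bins)]
    lo, hi = pitches[0], pitches[-1]
    mid = (lo + hi) / 2.0
    half = (hi - lo) / 2.0
    # Assumed shape: linear in pitch, 0 at both ends and 1 at the midpoint.
    green_tab = [1.0 - abs(p - mid) / half for p in pitches]
    return pitches, mid, green_tab

def colour_weights(pitch, mid, green):
    """(red, green, blue) fractions for one bin.

    Whatever is not green goes to red below the midpoint and to blue above it.
    """
    rest = 1.0 - green
    return (rest, green, 0.0) if pitch < mid else (0.0, green, rest)
```

With a table like this, each bin's fractions always add up to one, so no energy is lost or counted twice when splitting a bin between two colours.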

Before summing, bins can be scaled based on human ear audio sensitivity at that frequency. This ensures that frequencies where the human ear is less sensitive make less of a contribution. I found an ISO 226 Equal-Loudness-Level Contour Signal script for MATLAB which can run in GNU Octave. This isn't very important though.

When summing bins, it is important to sum power, not amplitude. Summing amplitudes does not make sense, but fortunately they are easy to convert to power: just square them before summing. Afterwards, they are converted back to amplitude via square root, because they are to be used for setting PWM values.
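As a rough illustration of the difference, here is a small Python sketch. The function name is made up, and applying the per-bin weight to power rather than to amplitude is an assumption, not something taken from the plugin code.

```python
import math

def sum_bins_as_power(amplitudes, weights):
    """Combine FFT bin amplitudes into one channel value by summing power."""
    # Square each amplitude to get power, weight it, and add everything up.
    power = sum(w * a * a for a, w in zip(amplitudes, weights))
    # Convert back to amplitude for use as a PWM value.
    return math.sqrt(power)
```

Two bins with amplitudes 3 and 4, both fully weighted, combine to 5 this way, where a plain amplitude sum would give 7.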

If the output of this algorithm was used to directly set lamp brightness, there would be a lot of rapid brightness changes. I find this unpleasant, so I added some smoothing. The code calculates an exponential moving average for each colour, but with more smoothing for decreases and less smoothing for increases. The faster response to increases ensures the lamp will appear responsive to loud sounds.
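The asymmetric smoothing can be sketched in a few lines of Python. The two coefficients below are placeholders chosen for illustration, not the values used in the plugin.

```python
def smooth(previous, target, rise=0.5, fall=0.05):
    """One step of an exponential moving average with fast attack and slow decay.

    rise and fall are hypothetical coefficients between 0 and 1; a larger
    value means less smoothing.
    """
    alpha = rise if target > previous else fall
    return previous + alpha * (target - previous)
```

Calling this once per frame for each colour makes the lamp jump up quickly on a loud sound and fade out slowly afterwards.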

After all this, the colours still did not appear to be in balance. To fix this, I calibrated using pink noise, and created scale factors for red and blue which cause white light when playing pink noise. It may be better to calibrate using music, but that would be more difficult and the resulting calibration might be biased toward the music used to calibrate it.
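One way such scale factors could be derived, assuming that equal red, green and blue values look white on the lamp and that the inputs are long-term channel averages measured while playing pink noise. Both function names are hypothetical.

```python
def calibration_factors(avg_red, avg_green, avg_blue):
    """Scale factors for red and blue that bring their averages up or down to green."""
    return avg_green / avg_red, avg_green / avg_blue

def apply_calibration(rgb, red_scale, blue_scale):
    """Apply the scale factors to one (red, green, blue) triple; green is the reference."""
    r, g, b = rgb
    return (r * red_scale, g, b * blue_scale)
```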

I'm posting about this because I'm so satisfied with the end result. I haven't done any tweaking of this algorithm in many months, and I still really like the effect.

Recently I have been experimenting with an on-screen visualization plugin inspired by this. Each moment is displayed on a horizontal line with stereo determining horizontal position. Pitch sets colour, intensity sets brightness, and very intense sounds cause a wider area to be coloured. Old data scrolls down. This part is still a work in progress, and I haven't published that code yet.

Wednesday, November 26, 2014

Trying out a mini logic analyzer

I just got a small and inexpensive logic analyzer from dx.com. Basically, it contains a CY7C68013A microcontroller and 74HC245 buffer chip, and you can upload firmware which makes it into a logic analyzer.

I used sigrok PulseView. First I tried it in Windows. It's easy to install the driver using the included Zadig executable. After that, PulseView tended to crash on startup if the logic analyzer was connected. I only got it to run a few times. Also, it seemed to lock up sometimes when capturing for a second or third time. I expect this is due to bugs in the Windows version of PulseView, and not due to a problem with the hardware.

Here's a decode of a NEC protocol IR remote. I couldn't get it to work at the default 20 kHz sample rate, even though that should have been fast enough. It worked at 100 kHz.

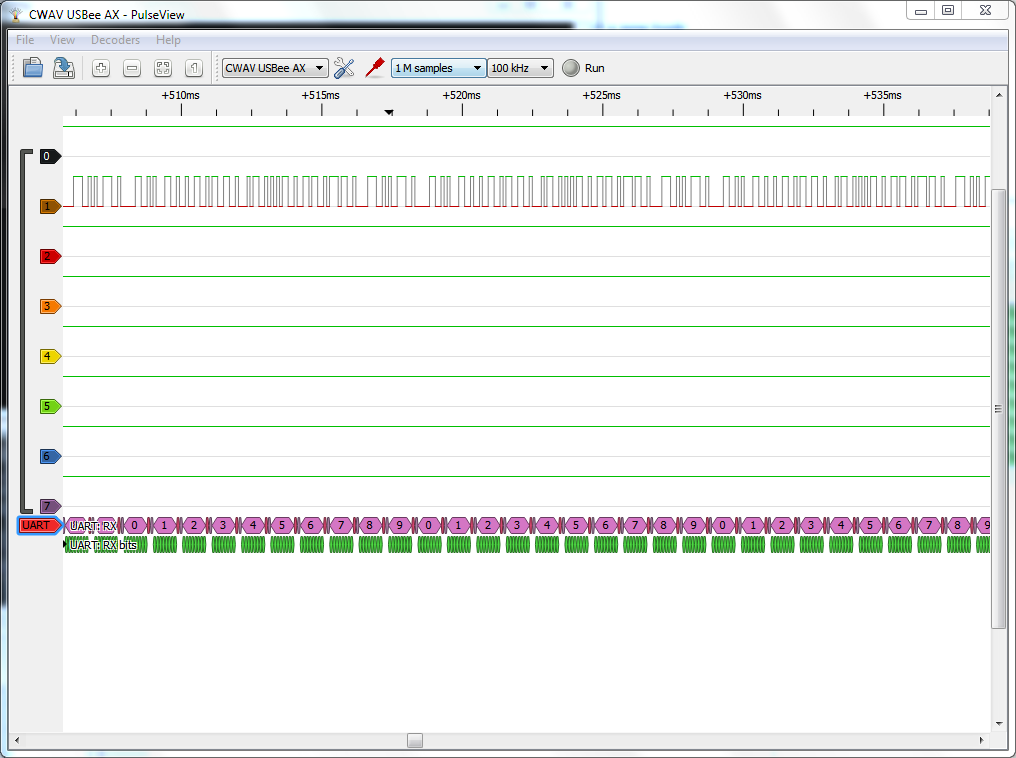

Here's some 9600 baud serial communication. This is also at 100 kHz. It also worked at 20 kHz sampling if I set the decoder baud rate to 10000. Problems with 20 kHz are understandable though, because it's just a bit over twice the baud rate.
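The arithmetic behind this is simple enough to sketch in Python. The function name is made up for illustration.

```python
def samples_per_bit(sample_rate_hz, baud):
    """How many logic analyzer samples fall within one serial bit."""
    return sample_rate_hz / baud

for rate, baud in [(20_000, 9600), (100_000, 9600), (20_000, 10_000)]:
    print(f"{rate} Hz sampling, {baud} baud: {samples_per_bit(rate, baud):.2f} samples per bit")
```

At 20 kHz there are only about 2.08 samples per 9600 baud bit, compared to about 10.4 at 100 kHz. Setting the decoder to 10000 baud makes that exactly 2 samples per bit, which may be related to why decoding worked with that setting, though that is only a guess.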

After this I rebooted into Linux because the Windows problems were getting too annoying. Installing sigrok was easy, because an Ubuntu package was available. I didn't have any problems in Linux. Here's some SPI. The Stellaris LaunchPad is communicating with an nRF24L01+ module and writing to some registers. This is at the maximum sample rate of 24 MHz.

As you can see, with a small piece of hardware you can order from China for $10 and some free software, you can have a 24 MHz logic analyzer which will decode protocols for you. That's truly impressive!

Saturday, November 15, 2014

If nRF24L01 modules don't work, add decoupling capacitors

I got two nRF24L01+ modules and tried to establish communication between the MSP430 and Stellaris LaunchPads. SPI communication worked, and I was able to read and write registers, but all attempts at wireless communication were a total failure. I even tried a kind of promiscuous mode, where I was getting data, but it all seemed to be garbage with no trace of the packets I was transmitting. Something was definitely being transmitted according to the chip's received power detector, though it was suspiciously spread out onto adjacent channels.

After wasting a few hours trying to figure out what was wrong with the software and finally taking a break, I got the idea to try adding bypass capacitors. They made everything work. The modules do have C8 and C9 which are supposed to be 1 nF and 10 nF respectively, but those are clearly not enough when the power supply wires are long. I just used some small electrolytic capacitors I had around, 56 µF on one module and 33 µF on another. Tantalum capacitors would be a better choice because of their smaller size and good high frequency performance.

Saturday, November 08, 2014

Chain booting Linux from Windows using Darwin chain0

EasyBCD can create a Windows boot menu entry for Linux, using GRUB installed in its partition. This copies the boot sector of the selected partition to C:\NST\nst_linux.mbr and sets up an entry to boot via that file. This works, but it can stop working if upgrades are installed in Linux and the boot sector changes. In that case, the boot sector needs to be copied to that file again. Deleting and re-creating the menu entry in EasyBCD will accomplish that.

It would be better to read the boot sector directly, but I don't think Windows supports that. Another alternative is to use a boot sector file which finds and loads the real boot sector. This approach is used when loading Mac OS X or Darwin via chain0. You can obtain a version of chain0 at C:\NST\nst_mac.mbr by setting up a Mac OS X MBR boot entry in EasyBCD. The version I have has an MD5 value of cfca64f400ef99e89b51e59bcb697137. I patched it to search for the Linux partition instead of OS X and used that version as nst_linux.mbr:

    C:\NST>fc /b nst_mac.mbr nst_linux.mbr
    Comparing files nst_mac.mbr and NST_LINUX.MBR
    0000008A: AB 83
    00000090: A8 83
    00000096: AF 83

It can search for three different partition types, but I only need to search for one so I set them all to 83 hex. This can successfully boot Linux from the first partition in my extended partition. If you have a more complicated setup, you may need a different version of chain0 with a fix for accessing other partitions in the extended partition.
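The same three-byte patch could be applied with a short Python script instead of a hex editor. This is only a sketch: the offsets and byte values come from the fc /b output above, while the function name and the safety check are illustrative additions.

```python
# offset: (original byte, replacement byte), taken from the fc /b comparison
PATCHES = {0x8A: (0xAB, 0x83), 0x90: (0xA8, 0x83), 0x96: (0xAF, 0x83)}

def patch_chain0(data):
    """Return a copy of the chain0 bytes with all three partition types set to 83 hex."""
    patched = bytearray(data)
    for offset, (old, new) in PATCHES.items():
        # Refuse to patch a file that does not look like the expected chain0 version.
        if patched[offset] != old:
            raise ValueError(
                f"unexpected byte {patched[offset]:02X} at offset {offset:08X}"
            )
        patched[offset] = new
    return bytes(patched)
```

To use it, read nst_mac.mbr in binary mode, pass the bytes through patch_chain0, and write the result to nst_linux.mbr. The check on the original bytes means a different chain0 version raises an error instead of being silently corrupted.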

Download the modified chain0 here.

Yellowish light seems brighter, and bright bluish light is more annoying

The brightness of the 100W cool white LED is confusing. After spending a while in a room illuminated by it, the room seems quite dim. Going away and coming back shows that the room is in fact very bright. A 300W halogen torchiere is probably dimmer than the LED, but the room seems brighter when lit that way, and that feeling of brightness doesn't go away.

The LED array itself seems extremely annoyingly bright. It is so bad that I can't stand it even in the farthest peripheral vision, and I will walk sideways with my back turned to it to avoid seeing it. The effect reminds me of HID headlights, but it's even worse. It's much worse than the sun or high power halogen lights.

I'm also reminded of how brown sunglasses affect perceived brightness. Their darkened lenses obviously decrease real brightness. However, on a bright sunny day they only seem to reduce the unpleasant aspects of excessive brightness, and they can even increase perceived pleasant brightness.

This gives me some ideas. Does yellowish light trigger pleasant feelings of brightness, and does bluish light trigger unpleasant feelings of excessive brightness? Does yellowish light constrict pupils less than bluish light?

Friday, November 07, 2014

Trying out a cheap Chinese 100W LED array

Recently I found that Chinese 100W LED arrays cost less than $5 on eBay, so I had to try one out. The LED array arrived in less than a month in a padded envelope with no other protection. This is not right, because white LEDs are static sensitive, but I guess you can't expect much for $5, and the LED works. Here's the LED at very low power:

Note how the LEDs have unequal brightness. One whole row is brighter, presumably because the dark LED in that row has greater forward leakage and a low voltage drop across it. This all looks bad, but it's normal at low power. Using higher current and a low PWM duty cycle would probably produce more uniform results, if that was needed.

The biggest heat sink I had was a Slot-A Athlon heat sink. After mounting it the first time, without paste just to check the fit, I could see light between the LED and the heat sink. There were high spots at the plastic-filled holes and slots in the LED's metal plate. I don't think the plastic was high; it seems more like the metal was distorted by punching operations. The whole thing was also warped on a larger scale. After sanding it with fine sandpaper on a piece of glass, I got a much better fit and mounted it.

Note how the LEDs have very similar brightness now at higher power. The LED is a bit dirty from Brasso, which was probably unnecessary. Fine sandpaper was good enough.

Then there was the question of how to drive the LED. I have a power supply from an old Fujitsu SMD hard drive, with -12V and +24V outputs, giving 36V, which is more than enough for the LED. I set up some primitive linear current regulation, using a 0.22 ohm resistor and Vbe of a small transistor. The transistor controlled the gate of a power MOSFET with a big heat sink. This works surprisingly well, though note that Vbe changes with temperature. It regulated the current to 2.7A and I measured 35V across the LED.
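The numbers above can be checked with a bit of arithmetic, sketched here in Python. It assumes the regulated current settles where the drop across the sense resistor equals the transistor's Vbe; the function name and its defaults are just the measured values restated.

```python
def regulator_numbers(sense_ohms=0.22, current_a=2.7, led_volts=35.0):
    """Return (implied Vbe in volts, LED power in watts) for the measured values."""
    # Voltage across the sense resistor, which is what the transistor's
    # base-emitter junction sees when regulation kicks in.
    implied_vbe = current_a * sense_ohms
    led_watts = current_a * led_volts
    return implied_vbe, led_watts

vbe, watts = regulator_numbers()
print(f"implied Vbe: {vbe:.3f} V, LED power: {watts:.1f} W")
```

This gives roughly 0.59 V, which is a plausible Vbe for a small silicon transistor, and about 94 W into the LED.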

The heat sink required some serious airflow to stay cool enough that I could keep my finger on it indefinitely near the LED. Fortunately that same SMD hard drive had two 8 cm fans, to which I added some cardboard ducting to concentrate the air. The power supply even monitors the fans, not via RPM but via thermistors which are cooled by airflow and heated by resistors.

The light coming from the LED is surprisingly hot. My fingers quickly feel burning hot within a few centimetres of the light emitting face, and heat can be felt much further away. It's not the soothing heat of an incandescent, but something more like the feeling of steam escaping from a pot. I guess that's because the infrared light from incandescent bulbs penetrates deeper than visible light.

The LED is also incredibly annoying to look at, and worse than the sun or even badly aimed HID headlights. It's totally unacceptable to have this LED even in the farthest part of my peripheral vision. At the same time, the room doesn't really feel very bright.

In the past I was thinking of retrofitting a powerful LED into a halogen torchiere which had a bad bulb socket. I didn't do it because I wasn't sure I could deal with the heat in an acceptable way. Two high speed fans are fine for experimentation, but a light I use every day should be fanless or at best have a slow speed fan. Instead I ended up fixing the torchiere, making new socket contacts from brass plumbing screws.

Comparing light from the 300W halogen torchiere and the 100W LED operating at around 90W, I definitely prefer the torchiere. It makes the room seem brighter even though the LED might actually be a bit brighter. It's hard to compare brightness due to different colours and lamp positions, but I don't think this LED is capable of 9000 to 10000 lumens at 3A. The LED's colour is also somewhat weird. Colours which would normally seem close to white seem yellowish or purplish.

Overall, I'm not too impressed. The light I'm getting doesn't make me want to create a more permanent lamp or flashlight using this LED. It was fun to play with though, and certainly worth the $5.

Tuesday, November 04, 2014

Craig CVD601 Android stick

I got a Craig CVD601 Android stick at the XS Cargo closing sale for $30. Android devices which are designed to be hooked up to a TV interested me, but I didn't have enough faith in the idea to actually order one. This was cheap and a good deal even compared to ordering from China, so I decided to try it out.

The device came with Android 4.1 Jelly Bean and worked, but the WiFi is terrible. At the same location, my laptop gets a good signal but the CVD601 can only occasionally connect with a terrible transfer rate. Best results were on channel 2, but even that was not usable. I created an access point on my laptop, but that's not a permanent solution.

Rooting

I quickly decided that rooting is necessary, because I don't want to keep running into obstacles where I can't do something because I don't have root access. This wasted a lot of time because various methods I tried didn't work. Some rely on security bugs which were fixed in earlier versions, or are designed to only work with particular devices. Eventually I found Cydia Impactor, which rooted the device quickly and easily. I used Impactor_0.9.14.zip, with MD5 162761dcbe0b2c0ac08cfb86dea8d715. Then I manually installed SuperSU.

After rooting, I edited /system/default.prop, removing tethering and developer_options from ro.wmt.ui.settings_remove to enable those settings. ADB access was available before, but this makes some things more convenient. I will also enable Ethernet settings when I get the USB adapter. Note that /system is normally mounted read-only, so it needs to be remounted via "mount -o remount,rw /system" before making changes. When done with changes, use "mount -o remount,ro /system" to make it read-only again.

Reverse tethering

Reverse tethering provides better network performance than wireless, but I had too much trouble getting it started, and I don't recommend wasting time on this. First, tethering needs to be enabled. If the option is greyed out, enable USB debugging first. Then, on the Android side, the rndis0 interface needs to be reconfigured. I never got "netcfg rndis0 dhcp" working, so I had to configure manually, with ifconfig and route. Windows contains the driver but requires an INF file. I used Microsoft's template customized with USB\VID_18D1&PID_0003.

Google Play

I also decided I had to install Google Play Store, because many apps are only available there. It's possible to download APK files and then install them, but that makes things more complicated. If simply installed like any other app, Play Store runs fine at first but crashes as soon as I try to download anything. After that, it keeps crashing on startup. This is because it doesn't have permission to install apps. The solution is installing it as a system app, by copying its APK to the /system/app folder with chmod 644. The app will also crash if its version is incompatible with the version of Google Play Services that is already installed. I installed FirmwareInstall/GoogleApp/system/app/Vending.apk from cvd601_602_firmware4.1.zip available on the Craig site. Then it updated both the store and the services app to the latest version, and everything worked fine after that.

Internals and the WiFi issue

Due to the WiFi problem and my curiosity I decided to open up the device:

Here is a closeup of the RTL8188ETV based WiFi module. It is a USB device, but with 3.3V power. You can find PDF documentation for similar devices online. The pins below and to the left connect to the antenna. Their top narrow part is spring loaded.

Here is the antenna. Note the indentations where the pins made contact. The left black part of the sticker has no metal underneath, and the right part is one solid sheet of metal. Antenna and ground are shorted together, with only that little slot between them. I wonder what kind of antenna this is, and how it works. RF seems like magic sometimes.

After experimenting with various wires connected to the pins, I found I got the best results with two quarter-wavelength wires placed 90 degrees apart. Then I cut the sticker in half lengthwise and glued the parts back on 90 degrees apart. This gave me a reliable connection to the router which was good enough for the web at least, but it didn't work the next day. Then it worked again after I squeezed the device near where the pins are. Maybe the pins don't make good contact? In any case, I don't want to waste more time on this, so I'll wait until I get the USB Ethernet adapter.
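For reference, a quarter wavelength at 2.4 GHz WiFi frequencies is easy to compute. This Python sketch uses the free-space formula only. Practical wire antennas are usually cut somewhat shorter, and the channel 2 centre frequency of 2417 MHz is used here just as an example.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def quarter_wavelength_mm(freq_hz):
    """Free-space quarter wavelength in millimetres."""
    return SPEED_OF_LIGHT / freq_hz / 4.0 * 1000.0

print(f"{quarter_wavelength_mm(2.417e9):.1f} mm")
```

That works out to roughly 31 mm per wire.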

Kernel source and config

The 3.0.8 Linux kernel for WM8850 is available on GitHub. It includes binary modules from WonderMedia. The configuration file can be extracted from the kernel on the device. Here is the kernel config for your convenience. The kernels in the boot image, recovery image and cvd601_602_firmware4.1.zip are identical.

Friday, October 03, 2014

Booting GRUB from a logical drive via the extended partition

The master boot record (MBR) contains code which is loaded at boot time, and a table which can list up to 4 partitions. Any data partitions created here are called primary partitions, and booting from them should be possible. One of those partitions can instead be an extended partition, which contains the same type of table, with up to 4 partitions. Partitions inside the extended partition are called logical drives, and it may not be possible to boot from those.
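The table layout is simple enough to read with a few lines of Python. This sketch relies on the standard MBR layout: 446 bytes of code, four 16-byte entries, and a 55 AA signature at the end of the sector. The function name and the dictionary keys are made up for illustration. The same function works on the first sector of an extended partition, since it uses the same kind of table.

```python
import struct

def parse_mbr(sector):
    """Return the four partition table entries of a 512-byte MBR-style sector."""
    if len(sector) < 512 or sector[510:512] != b"\x55\xaa":
        raise ValueError("not a valid boot sector")
    entries = []
    for i in range(4):
        raw = sector[446 + 16 * i : 446 + 16 * (i + 1)]
        # Entry layout: status, CHS start (3 bytes, skipped), type,
        # CHS end (3 bytes, skipped), LBA start, sector count.
        status, ptype, lba_start, num_sectors = struct.unpack("<B3xB3xII", raw)
        entries.append({
            "active": status == 0x80,
            "type": ptype,
            "extended": ptype in (0x05, 0x0F),
            "lba_start": lba_start,
            "sectors": num_sectors,
        })
    return entries
```

An entry with type 83 hex is a Linux partition, and an unused entry has type 0.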

I installed Linux on a logical drive. Due to known problems installing Windows service packs when GRUB is in the MBR, I refused to put GRUB there and instead put it in the logical drive containing Linux. GRUB was normally loaded by the Windows 7 bootloader.

Now I wanted to boot directly into GRUB, so I can use it to hide and unhide partitions and select between two versions of Windows. Making just the logical drive with Linux active did not work. The result was as if there is no active partition. It is possible to make the extended partition active, but grub-install refuses to install there, probably because grub-probe can't figure out the mapping for it.

I solved this by copying the code from the Linux logical drive boot sector to the first sector of the extended partition. It was simple, via "sudo dd if=linux_logical_drive_partition bs=446 count=1 of=extended_partition". The code is 446 bytes long, starting at the beginning of the sector. The rest of the destination sector contains information about partitions, which must not be overwritten. It is extremely important to use the right device names here. (They will typically be something like /dev/sda5, with the logical drive having a higher number than the extended partition.) Mistakes in dd commands writing to raw disk devices can cause unrecoverable data loss.

GRUB's first stage code doesn't care from where it's loaded. However, it does contain hard-coded sector locations, and if that needs to change it would have to be copied again in the same way.
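To make it clearer what that copy does, here is a rough Python equivalent which can be tried safely on image files before touching real devices. The function name is hypothetical, and this is a sketch rather than a replacement for dd.

```python
def copy_boot_code(src_path, dst_path, length=446):
    """Copy the first `length` bytes of src into dst, leaving the rest of dst untouched."""
    with open(src_path, "rb") as src:
        code = src.read(length)
    if len(code) != length:
        raise ValueError("source is shorter than the boot code area")
    # "r+b" updates the destination in place instead of truncating it,
    # so the partition table after byte 446 is preserved.
    with open(dst_path, "r+b") as dst:
        dst.write(code)
```

Only the 446 bytes of code change. The partition table and the signature in the last 66 bytes of the destination sector stay as they were.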

Wednesday, May 14, 2014

GA-P35-DS3R fan control

The Gigabyte GA-P35-DS3R motherboard has an IT8718F chip. It can control the speed of 3 fans via PWM and monitor the speed of 5 fans. The chip also has 3 thermal sensor inputs, which can be read by software. The SmartGuardian feature allows any thermal sensor input to be used to automatically control any fan without software intervention. Fan speeds can also be controlled from software. A PDF datasheet is available.

The BIOS has options to enable CPU fan speed control, and to use voltage or PWM to control the fan. I'm using voltage. The stock Q6600 fan has a 4 pin connector and supports PWM, but PWM causes noise. Voltage can control the speed just as well without the noise.

The BIOS programs the SmartGuardian feature to control CPU fan speed, but it doesn't provide any options for changing that configuration. Both Linux and SpeedFan support the IT8718F chip, but neither can program the SmartGuardian feature. SpeedFan only has an option in the Advanced tab to switch a fan from SmartGuardian to software control, which allows SpeedFan to control its speed. At least for the second PWM output, SpeedFan may not properly re-enable SmartGuardian.

An 8718fans program allows changing of SmartGuardian and other fan-related settings in the chip. It also allows viewing of current settings.

This GA-P35-DS3L information seems similar or identical to the GA-P35-DS3R. CPU fan speed is measured via the first fan sensor, and controlled via the first PWM output. That page claims that the first PWM output controls CPU fan voltage and the third output controls CPU fan PWM, which I didn't test. The second fan output controls voltage on SYS_FAN2, the 4 pin fan connector near the DIMMs and 24 pin power connector.

The BIOS sets up CPU fan control by using the second temperature sensor to control the first PWM output. This is a sensor at the CPU, but not one of the internal core temperature sensors that can be seen in programs like Core Temp. The IT8718F chip cannot use such sensors, because they can only be read by software running on the CPU. The second sensor measures temperatures which are about 10°C colder than the cores. According to 8718fans, full fan speed would be reached at 66°C, which probably corresponds to core temperatures near 76°C.

The BIOS also sets up sensor one to control PWM output two with the same settings. This is probably not reasonable for a case fan, because sensor one isn't at a particularly hot location. Its normal temperature is near 40°C, and if it reached 66°C, hotter areas would overheat.

The IT8718F SmartGuardian algorithm uses a slope, essentially just setting fan speed based on a linear relationship with temperature with some smoothing features. This means temperature depends on load, rather than being controlled to a particular level. If a certain fan speed corresponds to a certain temperature at a certain CPU load and CPU load increases, temperature increases until a new equilibrium is found, with a higher temperature and higher fan speed.
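The equilibrium behaviour described above can be illustrated with a toy model in Python. This is only a sketch under loud assumptions: the start temperature, minimum speed, ambient temperature and thermal resistance figures are invented, the function names are hypothetical, and nothing here reflects the chip's actual registers or smoothing. Only the 66°C full-speed point is taken from the 8718fans reading.

```python
def fan_speed(temp_c, start_c=40.0, full_c=66.0, min_speed=0.3):
    """Fan speed (0.3..1.0) as a straight-line function of temperature.

    start_c and min_speed are made-up values; full_c is the 66°C figure.
    """
    frac = (temp_c - start_c) / (full_c - start_c)
    frac = max(0.0, min(1.0, frac))  # clamp below start and above full speed
    return min_speed + (1.0 - min_speed) * frac


def thermal_resistance(speed):
    """Toy cooler model: °C per watt drops as the fan spins faster."""
    return 0.2 + 0.4 * (1.0 - speed)


def equilibrium(power_w, ambient_c=30.0):
    """Bisect for the temperature where heating and cooling balance."""
    lo, hi = ambient_c, 150.0
    for _ in range(60):
        mid = (lo + hi) / 2
        steady = ambient_c + power_w * thermal_resistance(fan_speed(mid))
        if steady > mid:
            lo = mid  # the CPU would still heat up at this temperature
        else:
            hi = mid  # the CPU would cool down at this temperature
    temp = (lo + hi) / 2
    return temp, fan_speed(temp)


low_temp, low_speed = equilibrium(40.0)    # lighter load, in watts
high_temp, high_speed = equilibrium(80.0)  # heavier load, in watts
```

With these invented numbers, the heavier load settles at both a higher temperature and a higher fan speed than the lighter load. A controller that held temperature at a fixed target would instead raise fan speed until the temperature returned to that target.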

I'm now using SpeedFan to control a case fan, but still letting SmartGuardian control the CPU fan. Maybe I will inject some code into the MBR or elsewhere to set up SmartGuardian for the case fan, because I prefer not to depend on an application for fan speed control.

Tuesday, May 13, 2014

A DIMM which won't work with any other DIMMs in the same channel

Since I first got it, I used my Gigabyte GA-P35-DS3R motherboard with 2 GB of DDR2 RAM. This was more than enough at first, but now it results in too much disk access even when only running KDE and Firefox.

The old RAM is an OCZ2G8002GK DDR2-800 OCZ Gold 2*1GB 5-5-5-15 kit consisting of two OCZ28001G modules. I was running it at stock speeds and voltages, and it seemed perfectly stable, never producing any errors in tests. I concluded that 4GB would probably be enough, but I chose to upgrade using 2*2GB for two reasons: I would have 4GB even if I couldn't get the kits working together, and it's always better to have more memory than you think you need. I found a really good deal on eBay for OCZ2P8004GK DDR2-800 OCZ Platinum 2*2GB, consisting of two OCZ2P8002G modules.

When I put in the new RAM together with the old RAM, I got a hang at the initial graphical BIOS screen, but I could boot if I only put in the new RAM. At first, this seemed like a compatibility problem, maybe because the old RAM required 1.8V and the new RAM required 2.1V. I had forgotten about OCZ Platinum requiring 2.1V, and that requirement wasn't stated anywhere on the eBay item page or the labels on RAM photos. I became skeptical when I saw that the old and new kits both worked alone at 5-5-5-15 timings, at either normal voltage or 2.1V. They only failed to work together.

Then I tried relaxing various primary and secondary timings and reducing the frequency. It seems the OCZ28001G modules couldn't handle CL6, but I could relax all the other timings. Nothing helped. In most cases, my computer would power off and back on twice and then hang. That seemed to be the motherboard's attempt to switch to more conservative settings. I assume it is meant to recover from a failed overclock, but it never managed to recover from this. I would have to remove a DIMM to get into BIOS setup and change settings for another attempt. Early on in this process I removed the hard drive so it wouldn't be subjected to all these power cycles. Eventually I was forced to give up because I couldn't imagine what else I could change.

I tried another experiment, putting the old RAM in the slots closest to the CPU, and the new RAM in the slots further away. The intention was to put the old RAM in one channel and the new RAM in another channel, in case they were incompatible in the same channel. This configuration allowed me to boot, but caused lots of Memtest86+ errors past 4 GB. According to DMI data, this address was in the middle of one of the new DIMMs. I didn't conclude anything based on this, because I didn't know if the configuration was supposed to work, and because it was weird to see errors start in the middle of a DIMM.

Later, I was inspired to try yet another experiment: two DIMMs in one channel, with nothing in the other channel. This would allow more possibilities with only 4 DIMMs. I found that one of the DIMMs wouldn't work with any other DIMM in the same channel, but the other DIMMs would work together. This finally made it seem like one DIMM is defective.
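The reasoning behind this pairwise experiment can be written down as a small Python sketch. The DIMM labels, the choice of which one is bad and the find_suspects helper are all hypothetical; the test results below are generated for illustration and are not recorded data.

```python
from itertools import combinations


def find_suspects(dimms, pair_works):
    """Return the DIMMs that fail with every possible partner."""
    suspects = []
    for dimm in dimms:
        partners = [p for p in dimms if p != dimm]
        if partners and all(not pair_works[frozenset((dimm, p))] for p in partners):
            suspects.append(dimm)
    return suspects


# Hypothetical labels for the two old 1 GB and two new 2 GB modules.
dimms = ["old_1GB_a", "old_1GB_b", "new_2GB_a", "new_2GB_b"]
bad = "new_2GB_b"  # made-up choice of which module misbehaves

# Simulated results: a pair in one channel works only if the bad DIMM is absent.
pair_works = {
    frozenset(pair): bad not in pair for pair in combinations(dimms, 2)
}

suspects = find_suspects(dimms, pair_works)
```

A DIMM is only flagged when it fails with all of its partners, which separates a single defective module from a plain incompatibility between the two kits. In the incompatibility case, each module would still work with the other module from its own kit, so nothing would be flagged.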

After getting a new G.Skill F2-6400CL5D-4GBPQ set, the suspect DIMM wouldn't work with either of those in the same channel, but the other OCZ2P8002G DIMM worked fine as part of a 2*2+1*1 GB DDR2-800 5-5-5-15 configuration with one of the new G.Skill DIMMs. This seems to confirm that one OCZ2P8002G DIMM is defective.

It's surprising that a DIMM can be bad in a way that it passes tests if alone in a channel but fails when there is another DIMM in the channel. However, it makes sense. Diagnostic programs can only tell you if the memory subsystem of that computer is reliably storing and retrieving data. They can't tell you if a DIMM is meeting its electronic specifications.

Thursday, May 08, 2014

Integrated heat spreader thermal contact failure

I recently upgraded an old PC to a Manchester socket 939 Athlon 4200+. After booting into Stresslinux, I ran mprime (the Linux version of Prime95) to check stability and temperatures. I didn't encounter any errors, but after a few minutes, core temperatures rose past 70°C and approached 80°C. That's bad in general and especially bad for that CPU, so I had to cut power.

After removing the heat sink, the paste application seemed fine. I tried applying paste several times, and even got some new Zalman ZM-STG2 paste, but nothing helped. I couldn't even get anything as good as the first result.

Eventually I found some message board posts about decapping, the thermal paste below the CPU integrated heat spreader (lid), and how that paste can fail. The fact that the heat sink wasn't even warm when the CPU cores approached 80°C, and the paste between the heat spreader and CPU seemed fine afterwards, made this seem like a probable explanation.

I first attempted to cut off the integrated heat spreader (IHS) with a utility knife. This didn't work because the blade was too thick and it couldn't fit into the narrow gap between the CPU circuit board and IHS. Then I pried apart a disposable razor and got one of the blades out. It's very thin and sharp, and it fit into the gap and cut nicely. The only difficulty was that it's also highly flexible, so it can cut the circuit board. Here are pictures of the decapped CPU:

The black material that was holding the IHS is like rubber. Note that it wasn't providing a hermetic seal; there is a gap on the left of the CPU. The brown material is remnants of Brasso, which I had used earlier to try to lap the IHS. The IHS was definitely slightly concave, but that wasn't the problem. The grey thermal paste was entirely dry and kind of like silicone rubber, but much easier to remove. My theory is that it works fine even when dry, and that problems happen due to the force used to separate the heat sink from the IHS. The black rubber holding the IHS allows some movement. If the dry thermal paste inside breaks apart due to that, it can't re-establish good contact.

I didn't want to run the CPU decapped because that would require modifying the motherboard, and maybe the heat sink retention. The plastic frame surrounding the socket prevents the heat sink from getting low enough to make good contact with the chip, and even if that was cut away, I'm not sure if the heat sink retention would provide enough force when the heat sink sits lower. Also, the IHS seems to be plated copper, and it might actually help with heat transfer to the stock aluminum heat sink. I just shaved down the black rubber a bit, cleaned off old paste, added new paste, and reassembled without attaching the IHS to the CPU.

After all this, running mprime on both cores resulted in temperatures of 45°C and below. This was with the stock cooler for an Athlon 64 3500+ Newcastle (also 89W TDP) and Zalman ZM-STG2 paste.
